Difference between revisions of "OCEOS"

imported>Dtimofejevs |

imported>Dtimofejevs |

||

| Line 1,364: | Line 1,364: | ||

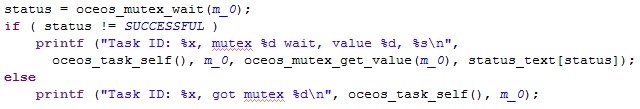

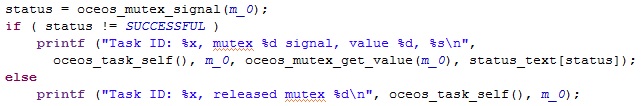


Revision as of 00:36, 25 September 2020

Introduction

OCEOS is a pre-emptive real time operating system (RTOS) with a small memory footprint intended for hard real time systems that use the GR716 micro-controller [ref.].

It was developed by O.C.E. Technology with support from the European Space Agency (ESA) under project 4000127901/19/NL/AS.

This document describes the features and use of OCEOS, and details its behaviour and system calls.

Applicable and reference documents

Applicable Documents

| ID | Title | Version |
|---|---|---|
| E40 | ECSS Space engineering – Software, ECSS-E-ST-40C | 06/03/09 |
| Q80 | ECSS Space product assurance – Software Product Assurance ECSS-Q-ST-80 | 15/02/17 |
| SRS | Software Requirements Specification, (OCEOS RTOS). OCE Technology | 06/20 Version 3.0 |
| ICD | Interface Control Document, (OCEOS RTOS). OCE Technology, OCE-2018-ICD-001 | 05/12/18 Rev 2.0 |

Reference Documents

| ID | Title | Version |
|---|---|---|
| BAK91 | T. P. Baker. Stack-based scheduling of real-time processes. Journal of Real-Time Systems, 3, 1991. http://www.math.unipd.it/~tullio/RTS/2009/Baker-1991.pdf | 1991 |
| GRLIB | GRLIB IP Library User’s Manual | Apr 2018, Version 2018.1 |
| IRD-GR716 | GR716 LEON3FT Microcontroller, 2018 Advanced Data Sheet and User’s Manual, GR716-DS-UM, Cobham. | Nov 2018 Version 1.26 |
| SDD | Software Design Document, OCE-2020-SDD-001 | Rev 3.1, 01/07/2020 |
| QR | OCEOS-QuickReference.pdf | Rev 1.0 |

Terms, definitions and abbreviated terms

action:

performed at a specific time, in OCEOS either moving a job to the ready queue or outputting a value to an address.

activate a task:

please see ‘start a task’

alarm:

a trap caused by a counter reaching a certain value.

application program interface (API):

describes the applications interface with OCEOS, usually referred to here as ‘directives’.

area:

refers to a part of memory reserved for OCEOS internal data. OCEOS uses three such areas: the fixed data area for information that does not change after all tasks etc. are created, the dynamic area for information about tasks etc. that changes as scheduling takes place, and the log area, primarily for information about any anomalous conditions detected, including the system state variable and the system log. The start addresses of the three areas are given by the application developer and must be 32-bit word aligned. Starting from these addresses each area is created by OCEOS as a block of 32-bit words that starts with OCEOS_VERSION followed by the count in words of the area size and ending with END_SENTINEL. The size of each area depends on the characteristics of the application such as number of tasks, mutexes, etc. and does not change once set up. OCEOS provides a header file oceos_areas.h which makes field offsets in each area and area sizes available as defined constants if certain application characteristics are pre-defined. Pointers to the starts of the various fields in each area are provided in the system meta structure referenced by sysMetaPtr. In addition to these areas OCEOS uses the system stack and a small amount of space on the heap. OCEOS does not use dynamic memory allocation calls such as malloc().
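The block layout described above (version word, size in words, END_SENTINEL at the end) can be illustrated with a small consistency check. This is only a sketch: the constant values, the function name `area_is_well_formed` and the assumption that the size word counts the whole block including header and sentinel are hypothetical and are not taken from the OCEOS headers.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Placeholder values; the real OCEOS_VERSION and END_SENTINEL are defined by OCEOS. */
#define EXAMPLE_VERSION      0x00010000u
#define EXAMPLE_END_SENTINEL 0xE0E0E0E0u

/* Checks the assumed layout: [version][size in words] ... [sentinel].
 * max_words is the amount of memory the caller reserved for the area. */
bool area_is_well_formed(const uint32_t *area, size_t max_words)
{
    if (area == NULL || ((uintptr_t)area % 4u) != 0u) {
        return false;                  /* must be 32-bit word aligned */
    }
    if (max_words < 3u || area[0] != EXAMPLE_VERSION) {
        return false;
    }
    uint32_t size_words = area[1];     /* assumed to count the whole block */
    if (size_words < 3u || size_words > max_words) {
        return false;
    }
    return area[size_words - 1u] == EXAMPLE_END_SENTINEL;
}
```

A check of this kind is cheap enough to run at start-up or from the idle job to detect corruption of an area.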

atomic:

an operation which, once started, does not allow other operations to acquire the CPU until it completes.

counter:

an unsigned integer typically updated by an interrupt or system call. Note: in OCEOS a counter may involve 8 bits, 16 bits or 32 bits. Note: in OCEOS a counter does not wrap around through zero but remains at its maximum/minimum value once this has been reached.
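The non-wrapping behaviour can be sketched for an 8-bit counter as follows. The function names are hypothetical and only illustrate the saturation rule; they are not OCEOS directives.

```c
#include <stdint.h>

/* Increment that stays at the maximum instead of wrapping to zero. */
uint8_t counter8_increment(uint8_t c)
{
    return (c == UINT8_MAX) ? c : (uint8_t)(c + 1u);
}

/* Decrement that stays at zero instead of wrapping to the maximum. */
uint8_t counter8_decrement(uint8_t c)
{
    return (c == 0u) ? c : (uint8_t)(c - 1u);
}
```

The same pattern applies to 16-bit and 32-bit counters with UINT16_MAX and UINT32_MAX.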

counting semaphore:

used to synchronize different tasks. It has a 32-bit counter, a create() operation used only in system initialisation, three atomic operations, signal() which increments the counter, wait_continue() and wait_restart() which decrement the counter unless it is already zero, a value() operation that returns the current counter value, and a pending jobs queue of pending jobs placed there as a result of a wait_restart() operation done when the counter was already zero.

Note: Signal operations and wait operations on a semaphore usually occur in different tasks.

Note: In OCEOS signal() causes the semaphore counter to increment and all jobs on its pending jobs queue to transfer to the ready queue in the order of their arrival on the pending jobs queue. This is followed by a call to the scheduler. A status code is returned for signal() that indicates that it has succeeded or indicates an error condition such as an attempt to transfer pending jobs when the ready queue is full.

Note: In OCEOS waiting on a semaphore has two options, wait_continue() and wait_restart(). If the semaphore is non-zero both options behave identically: the semaphore counter is decremented, a successful status code returned, and the job continues. If the semaphore counter is zero wait_continue() leaves the counter unchanged at zero but returns an unsuccessful status code and the job continues taking this status into account. The wait_restart() operation allows a timeout be specified. If the semaphore counter is zero, wait_restart() checks if the job was started as a result of a timeout on this semaphore and if so behaves like wait_continue(), leaves the counter unchanged, returns an unsuccessful status code indicating that the wait has timed out, and the job continues taking this into account. If the semaphore counter is zero and the job did not start as a result of a timeout on this semaphore wait_restart() leaves the counter unchanged but terminates the job after placing a pending version of it on the semaphore’s pending jobs queue and if a timeout is specified also on the timed actions queue. The job will restart after the semaphore is signalled or after a timeout if a timeout was specified. If no timeout is specified the job remains on the semaphore’s pending jobs queue indefinitely until some other job signals the semaphore.

N.B. In OCEOS a job does not block at the point where it does a wait operation on a zero semaphore, depending on the option chosen it either continues or terminates and restarts later. If a job uses the restart option, any local data at that point that is to be available after the job restarts needs to be stored globally as local data is lost when the job terminates.
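The semaphore rules in the notes above can be summarised as a small state model. This is a simplified sketch, not the OCEOS implementation: the type and function names, the status codes and the use of a plain count in place of the FIFO pending jobs queue are all assumptions made for illustration, and timeouts are reduced to a flag.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum {
    SEM_OK,           /* counter was non-zero and has been decremented */
    SEM_ZERO,         /* wait_continue() found the counter at zero */
    SEM_TIMED_OUT,    /* job was restarted by a timeout, counter still zero */
    SEM_JOB_REQUEUED  /* job terminates, a pending copy waits on the semaphore */
} sem_status;

typedef struct {
    uint32_t count;
    unsigned pending_jobs;  /* stand-in for the FIFO pending jobs queue */
} model_sem;

sem_status model_wait_continue(model_sem *s)
{
    assert(s != NULL);
    if (s->count > 0u) { s->count--; return SEM_OK; }
    return SEM_ZERO;                   /* job carries on, counter unchanged */
}

sem_status model_wait_restart(model_sem *s, bool started_by_timeout)
{
    assert(s != NULL);
    if (s->count > 0u) { s->count--; return SEM_OK; }
    if (started_by_timeout) { return SEM_TIMED_OUT; }
    s->pending_jobs++;                 /* job ends here and restarts later */
    return SEM_JOB_REQUEUED;
}

/* Increments the counter (saturating) and releases every pending job.
 * Returns the number of jobs that would move to the ready queue. */
unsigned model_signal(model_sem *s)
{
    assert(s != NULL);
    unsigned released = s->pending_jobs;
    if (s->count != UINT32_MAX) { s->count++; }
    s->pending_jobs = 0u;
    return released;
}
```

In a real application the job that receives the "requeued" outcome never sees a return value, since it is terminated at that point; the model returns a status only so the outcome can be inspected.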

critical section:

a sequence of instructions that should not be in use by more than one task at a time.

Note: Usually protected by a mutex that is locked at the start of the critical section and released at the end.

Note: Critical sections should be as short as possible.

data queue:

a queue of non-null void pointers used to exchange data between tasks and to synchronize tasks. It has a create() operation that is only used during system initialisation and three atomic operations, write() which places a non-null pointer on the queue, and read_continue() and read_restart() which read a pointer from the queue if the queue is not empty. It also has a dataq_get_size() operation that returns the number of pointers on the queue, and a pending jobs queue of pending jobs placed there as a result of a read_restart() operation when the queue was empty. Note: Write operations and read operations on a queue usually occur in different tasks.

Note: If the queue is not full OCEOS write() causes a non-null pointer to be added to the queue and all jobs on its pending jobs queue to transfer to the ready queue in the order of their arrival on the pending jobs queue. This is followed by a call to the scheduler. A status code is returned that indicates success or that an error condition has occurred such as an attempt to write a null pointer or write to a full data queue.

Note: In OCEOS reading a queue has two options, read_continue() and read_restart().

If the queue is not empty both versions behave identically: the first element on the queue is returned and the job continues. If the queue is empty read_continue() returns a null pointer and the job continues, taking the empty queue state into account. The read_restart() operation allows a timeout be specified. If the queue is empty read_restart() checks if the job was started as a result of a timeout, and if so behaves like read_continue(), returns a null pointer and the job continues taking the empty queue timeout into account. If the queue is empty and the job did not start as a result of a timeout read_restart() terminates the job after placing a pending version of it on the data queue’s pending jobs queue and if a timeout is specified also on the timed actions queue. The job will restart after the queue is written or after a timeout if a timeout was specified. If no timeout is specified in read_restart() the job remains indefinitely on this data queue’s pending jobs queue until some other task adds an element to this data queue.

N.B. In OCEOS a job does not block at the point where it does a read operation on an empty queue, depending on the option chosen it either continues or terminates and restarts later. If a job uses the restart option, any local data at that point that is to be available after the job restarts needs to be stored globally as local data is lost when the job terminates.
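The write and read_continue rules for a data queue can be sketched with a fixed-size ring buffer of pointers. The names, the capacity and the status codes below are hypothetical; the sketch leaves out the pending jobs queue and the scheduler call that follow a successful write in OCEOS.

```c
#include <assert.h>
#include <stddef.h>

#define MODEL_DATAQ_CAPACITY 4u

typedef enum { DQ_OK, DQ_NULL_POINTER, DQ_FULL } dq_status;

typedef struct {
    void  *slots[MODEL_DATAQ_CAPACITY];
    size_t head;   /* index of the oldest entry */
    size_t count;  /* number of pointers currently queued */
} model_dataq;

/* Adds a pointer at the tail; null pointers and a full queue are errors. */
dq_status model_dataq_write(model_dataq *q, void *item)
{
    assert(q != NULL);
    if (item == NULL) { return DQ_NULL_POINTER; }
    if (q->count == MODEL_DATAQ_CAPACITY) { return DQ_FULL; }
    q->slots[(q->head + q->count) % MODEL_DATAQ_CAPACITY] = item;
    q->count++;
    return DQ_OK;
}

/* Returns the oldest pointer, or NULL if the queue is empty. */
void *model_dataq_read_continue(model_dataq *q)
{
    assert(q != NULL);
    if (q->count == 0u) { return NULL; }
    void *item = q->slots[q->head];
    q->head = (q->head + 1u) % MODEL_DATAQ_CAPACITY;
    q->count--;
    return item;
}
```

Because a null pointer is never stored, a NULL result from a read unambiguously signals an empty queue, which is why the queue only accepts non-null pointers.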

deadlock:

a scheduling condition where jobs cannot proceed because each holds a resource the other needs.

Note: In OCEOS deadlocks cannot occur.

directive:

This is an individual function with an interface to the external application software (ASW). These offer control over tasks, message queues, semaphores, memory, timers etc.

dynamic area:

area used by OCEOS for internal data that changes as part of normal scheduling operations. It contains information such as the current system priority ceiling, states of tasks, mutexes etc. It is set up and initialised by oceos_start() based on the fixed area information passed to it. Please also see ‘area’ above.

error handling:

actions taken when the operating system detects that a problem has occurred.

Note: In OCEOS this typically involves one or a combination of returning an appropriate status code, making a system log entry, updating the system state variable, calling a user defined problem handling function. If called the problem handling function can use the log and the system state variable to determine the action that should be taken.

error hook:

a reference to the user defined function called when an abnormal condition is detected.

event:

in some OS a job can suspend its own execution until a specified event occurs. In OCEOS an active job may be pre-empted by a higher priority job but never suspends itself.

Note: In OCEOS similar functionality is provided by wait_restart() on a counting semaphore that is signalled later by an interrupt handler.

fixed area:

area used by OCEOS for internal data that is defined in initialisation and as tasks, mutexes etc. are created and does not change once scheduling has begun. It contains information such as number of tasks, task priorities, priority ceilings of mutexes, etc. The area is initialised by oceos_init() and finalised after all tasks etc. have been created by oceos_init_finish(), which adds an XOR checksum in the penultimate word. The fixed area is passed to oceos_start(), which begins scheduling. Please also refer to ‘area’ above.
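An XOR checksum stored in the penultimate word can be sketched as below. Which words OCEOS actually includes in the checksum is not stated here, so this sketch assumes it covers every word before the checksum word; the function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* XOR of the first n words. */
uint32_t xor_checksum(const uint32_t *words, size_t n)
{
    uint32_t x = 0u;
    for (size_t i = 0; i < n; i++) {
        x ^= words[i];
    }
    return x;
}

/* Stores the checksum of words [0, total_words - 2) in the penultimate word. */
void area_seal(uint32_t *area, size_t total_words)
{
    assert(area != NULL && total_words >= 2u);
    area[total_words - 2u] = xor_checksum(area, total_words - 2u);
}

/* Recomputes the checksum and compares it with the stored value. */
bool area_checksum_ok(const uint32_t *area, size_t total_words)
{
    assert(area != NULL && total_words >= 2u);
    return area[total_words - 2u] == xor_checksum(area, total_words - 2u);
}
```

Since the fixed area does not change once scheduling has begun, a check like this can be repeated at any later time to detect corruption.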

hook:

a reference to a user defined function called by the operating system in certain conditions.

idle job:

an execution instance of the unique lowest priority task which, unlike other jobs, may run indefinitely. Typically this ‘idle job’ is not idle, but used for system monitoring and initiating system correction activities. To save power it may put the CPU in sleep mode waiting for interrupts. If no idle job is present OCEOS puts the CPU in sleep mode when all jobs are finished.

initialisation code:

This code executes when the CPU is reset and before main() is called. It is part of the BCC/BSP system used with the GR716 [IRD-GR716]. It is not part of OCEOS.

interrupt:

a form of trap caused by a non-zero input to the CPU from the interrupt controller due to a change in state of one of the external links connected to the interrupt controller. Please refer to ‘trap’.

interrupt handler:

please see ‘trap handler’.

interrupt latency:

the time between the occurrence of the external interrupt condition and the execution of the first instruction of the interrupt handler. The interrupt controller on the GR716 makes this time available to the application software for a number of interrupts.

interrupt level:

the interrupt priority as determined by the CPU architecture and the interrupt controller.

interrupt service routine:

the trap handler associated with the interrupt.

job:

an execution instance of a task created as a result of a request to start a task. Such a request can occur before a job created by a previous request has completed execution, or even begun execution, so a task can have a number of jobs waiting to execute. The maximum allowed number of such jobs for a task is specified when the task is created. A request to start a task is accompanied by a pointer which is passed to the job allowing different execution instances of the same task act on different data. All jobs must complete in finite time with the exception of the idle job. In OCEOS a job is ‘pending’ if it has not yet started execution and ‘active’ if it has started execution. Only pending jobs are placed on pending jobs queues of semaphores or data queues or on the timed actions queue. Active jobs can be pre-empted by higher priority jobs but otherwise run to completion and are never on pending jobs queues or on the timed actions queue. Data stored for each job includes origin, creation time, activation time, execution time, time to completion, and number of pre-emptions. This job data is used to update the task data when a job terminates.

kernel mode:

The CPU mode in which all instructions are available for use and all physical memory addresses accessible.

log area:

OCEOS memory area used to store system state information that is only infrequently updated in normal operations, including the system state variable and the system log. As this area is usually accessed only when a problem occurs, it may be stored in relatively slow external memory without impacting performance, and if this is non-volatile can preserve aspects of OCEOS state across power cycles. Please also refer to ’area’ above.

manager:

A conceptual group of directives of similar functionality, one set of directives offering functionality and access to resources in the same logical domain. Managers may be core (contained in every OCEOS configuration) or optional (selected or otherwise by the application developer).

module:

OCEOS is structured as independent modules responsible for different OS features. If a component is not required the associated module is omitted at link time. Core modules are always present.

mutex:

a binary semaphore used to provide mutual exclusion of jobs so that only one job at a time can access a shared resource or critical code section. A mutex has two states, locked and unlocked, a create() operation only used in system initialisation, two atomic operations, wait() to lock and signal() to unlock, and a priority ceiling value set when the mutex is created by the application to be the priority of the highest priority task that uses the mutex. A value() operation allows its current state be read.

Note: The wait() and signal() operations on a mutex should occur in pairs in the same task.

Note: The number of instruction executions between the wait() and the signal() must be finite and should be as short as possible.

Note: In OCEOS a higher priority job is blocked from starting if a mutex is locked that might be needed for that job to finish. Once a job has started, any wait() it performs on a mutex will succeed in locking the mutex, it would not have started were the mutex unavailable.

Note: The application developer is responsible for associating a mutex with a shared resource and ensuring it is used whenever the shared resource is accessed.

Note: A mutex does not have an associated pending jobs queue. The scheduler will not allow any job start if an already locked mutex might have to be returned in order for the job to finish, such jobs remain on the ready queue.

Note: The mutex is locked by wait(). This may change the current system priority ceiling. No job that in order to finish might need the mutex will be started by the scheduler. The mutex is unlocked by signal(). This may reduce the current system priority ceiling and may result in the scheduler pre-empting the current job.

mutual exclusion:

if a task accessing a complex structure is pre-empted before it has finished with the structure the structure may be in an inconsistent state, leading to problems if it is accessed by another task. It must be possible for a task to exclude other tasks that use that resource from executing. Please see ‘mutex’.

origin:

this indicates whence a pending job came when it was transferred to the ready queue. The job may have just been created, or have been transferred from a pending jobs queue as a result of a counting semaphore being signalled or data queue being written, or as a result of a timeout.

pending jobs queue:

this is either a FIFO queue of pending jobs, or part of a priority queue of actions. Each counting semaphore and each data queue has a FIFO based queue of pending jobs. The timed actions queue that holds pending jobs is a priority queue based on activation time.

Note: In OCEOS a mutex does not have a pending jobs queue.

pre-empt:

the scheduler pre-empts the processor from the currently running job when a job with higher priority than the current system priority ceiling is ready to start.

priority ceiling:

each mutex has a priority ceiling equal to the priority of the highest priority task that uses it.

Note: OCEOS will only start a pending job if its priority is higher than the priority ceilings of all currently locked mutexes.

priority ceiling protocol:

an approach to scheduling and to sharing resources.

Note: OCEOS is based on an extension of this, the stack resource policy [BAK91].

priority queue:

the order in which elements are removed is based on their priorities rather than on the order in which they arrived on the priority queue. In OCEOS the ready queue is a priority queue based on job priority and order of arrival, the timed actions queue is a priority queue based on time.

queue:

A first in first out (FIFO) queue such as the ‘pending jobs queue’ or ‘data queue’.

ready queue:

a priority queue of jobs with priority based on job priority and within priority on order of arrival.

Note: In OCEOS this holds references to pending jobs which have not yet started running, to active jobs that have been pre-empted, and to the currently executing job. A job is removed from the ready queue when it terminates.

Note:

The scheduler puts the highest priority job into execution on the processor if and only if its priority is higher than the current system priority ceiling.
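The ordering rule for the ready queue (job priority first, then order of arrival) can be expressed as a comparison function. The structure and field names are assumptions for illustration; an arrival sequence number stands in for whatever OCEOS uses internally to record arrival order.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint8_t  priority;  /* 1 is the highest task priority, 254 the lowest */
    uint32_t arrival;   /* sequence number assigned when the job was queued */
} ready_entry;

/* True if job a should be ahead of job b on the ready queue. */
bool runs_before(const ready_entry *a, const ready_entry *b)
{
    assert(a != NULL && b != NULL);
    if (a->priority != b->priority) {
        return a->priority < b->priority;  /* smaller number, higher priority */
    }
    return a->arrival < b->arrival;        /* same priority: first in, first out */
}
```

Being at the head of the ready queue is not sufficient for a pending job to start: its priority must also be higher than the current system priority ceiling.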

resource:

usually a reference to a shared resource that can be accessed by different tasks.

Note: Shared resources give rise to many potential problems, please see ‘mutex’.

running:

the running job is the job whose instructions are currently being executed on the processor.

Note: In OCEOS an active job is running unless interrupted or pre-empted, it is never in a suspended state waiting on a resource (other than the CPU).

scheduler:

a central part of OCEOS that determines which job should be executed on the processor. In order to allow another job begin the scheduler may pre-empt the processor from the currently executing job, this stays on the ready queue for resumption later.

scheduling policy:

determines whether the currently executing job should be pre-empted.

Note: In OCEOS this is based on the stack resource policy with fixed priority tasks and single resources.

Note: In OCEOS pre-emption occurs if and only if the priority of the new job to be started is higher than the current system priority ceiling.

semaphore:

please see ‘counting semaphore’ and also ‘mutex’.

sleep mode:

activities in the CPU are powered off except those required to monitor external interrupts. When an interrupt is detected the CPU vectors to the corresponding interrupt handler and resumes normal execution.

software component:

system settings data, initialisation code, trap handler code (both OCEOS and application), application tasks and applicable OCEOS modules.

stack resource policy:

an extension of the priority ceiling protocol that makes unbounded priority inversion, chained blocking and deadlocks impossible and allows all tasks to share a single stack.

start a task:

create an execution instance of the task (i.e. a job), put this job on the ready queue, and call the scheduler.

Note: if the task has higher priority than the current system priority ceiling the scheduler will pre-empt the current active job and place the new job into execution.

Note: if the number of current jobs for this task has already reached this task’s jobs limit, a new job will not be created and an error will be reported.

Note: In OCEOS after a job is created it remains in a ‘pending’ state until it is first put into execution on the processor, becoming ‘active’ once it has started execution.

suspended:

no execution instances of the task are currently present

shutdown hook:

address of routine called to shut down the system

system log:

stored in the OCEOS log data area whose start address is given by the application developer. Structured as an array of 64-bit log entries. The maximum number of log entries, which should be in the range 16 to 1024, is given by the application developer. The log is treated as a circular buffer and the application developer can define a function to be called when the log becomes ¾ full. Log entries are preserved across system reset and, if stored in non-volatile memory, across power on-off cycles. See also ‘dynamic area’ and ‘fixed area’.
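A circular log of 64-bit entries with a ¾-full callback can be sketched as follows. All names are hypothetical, the entry format is left opaque, and the sketch assumes "¾ full" means the number of stored entries reaching three quarters of the capacity; how OCEOS counts entries that have already been read is not modelled.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define MODEL_LOG_ENTRIES 16u  /* the manual allows 16 to 1024 entries */

typedef void (*log_hook)(void);

typedef struct {
    uint64_t entries[MODEL_LOG_ENTRIES];
    size_t   next;               /* index the next entry is written to */
    size_t   used;               /* valid entries, capped at the capacity */
    log_hook on_three_quarters;  /* optional user function, may be NULL */
} model_log;

/* Example hook that only counts how often it has been called. */
unsigned example_hook_calls = 0u;
void example_hook(void) { example_hook_calls++; }

/* Appends an entry, overwriting the oldest one once the buffer is full. */
void model_log_add(model_log *log, uint64_t entry)
{
    assert(log != NULL);
    log->entries[log->next] = entry;
    log->next = (log->next + 1u) % MODEL_LOG_ENTRIES;
    if (log->used < MODEL_LOG_ENTRIES) {
        log->used++;
        if (log->used == (MODEL_LOG_ENTRIES * 3u) / 4u &&
            log->on_three_quarters != NULL) {
            log->on_three_quarters();
        }
    }
}
```

The hook gives the application a chance to copy or process the log before older entries start to be overwritten.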

system priority ceiling:

an integer in the range 0 to 255, set to 255 (lowest priority) at system start. An execution instance of a task (i.e. a job) can only start executing if its priority is higher than the current system priority ceiling.

Note: Its value is determined by the pre-emption threshold of the currently executing job and the priority ceiling of any mutex held by that job. When a job starts, the system priority ceiling is changed to the pre-emption threshold of the job, returning to its previous value when the job terminates. When a job obtains a mutex, the value is changed to the priority ceiling of the mutex if this is higher priority than the current system priority ceiling, returning to its previous value when the mutex is released.

system priority ceiling stack:

used to hold successive system priority ceiling values on a LIFO basis, initialized to hold the value 255 (lowest priority).

Note: not to be confused with the system stack

Note: In OCEOS this is a byte array with up to 256 entries (64 words), depending on the number of tasks in an application.
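The interaction between the system priority ceiling and its stack can be modelled as below. This is a sketch under stated assumptions: the names are hypothetical, one "raise" operation is used both for a job start (pushing its pre-emption threshold) and for a mutex lock (pushing the mutex priority ceiling), and the real OCEOS bookkeeping may differ.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define LOWEST_PRIORITY 255u

typedef struct {
    uint8_t  values[256];
    unsigned depth;  /* entries in use, always at least 1 */
} ceiling_stack;

void ceiling_init(ceiling_stack *s)
{
    assert(s != NULL);
    s->values[0] = LOWEST_PRIORITY;
    s->depth = 1u;
}

uint8_t ceiling_current(const ceiling_stack *s)
{
    assert(s != NULL && s->depth >= 1u);
    return s->values[s->depth - 1u];
}

/* A job may start only if its priority is higher (numerically smaller)
 * than the current system priority ceiling. */
bool job_can_start(const ceiling_stack *s, uint8_t job_priority)
{
    return job_priority < ceiling_current(s);
}

/* Pushes the new ceiling, never lowering the priority of the current one. */
void ceiling_raise(ceiling_stack *s, uint8_t new_ceiling)
{
    assert(s != NULL && s->depth < 256u);
    uint8_t cur = ceiling_current(s);
    s->values[s->depth++] = (new_ceiling < cur) ? new_ceiling : cur;
}

/* Restores the previous ceiling on job termination or mutex release. */
void ceiling_restore(ceiling_stack *s)
{
    assert(s != NULL && s->depth > 1u);
    s->depth--;
}
```

Because values are restored strictly in last-in, first-out order, a simple byte stack is enough to recover the previous ceiling without any search.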

system state variable:

this indicates whether various warning or error conditions have occurred. When set by OCEOS a user defined problem handling function may be called, depending on the condition. This variable can be accessed by the application at any time.

task:

an application is structured as relatively independent tasks and interrupt handling routines. Each task has a fixed priority and fixed pre-emption threshold and a fixed limit to the number of its execution instances (‘jobs’) that can exist simultaneously. Each task has an associated principal function that is called when a job starts, and an optional termination function that can be used to terminate task execution in an orderly way if a task has to be aborted. A flag indicates whether use is made of floating point hardware, but saving and restoring any floating point registers used is done by BCC, not by OCEOS. A pointer is passed to the task which can be used to select the structure processed by the task. The task pre-emption threshold facilitates avoiding context switches for tasks with very short execution times, and also allows task operation be made atomic in relation to other tasks. A task can be enabled or disabled; in the disabled state attempts to put it into execution fail but are logged. OCEOS can be asked to put a task into execution (create a job) before a previous execution of the task has completed, or even begun, please see ‘job’ above. Data stored for each task includes number of times executed, maximum number of current execution instances, maximum number of times pre-empted, minimum times between execution requests, maximum activation wait time, maximum execution time and maximum time from start request to completion. This data is updated each time one of the task’s jobs completes.

task chaining:

a task can cause another task to start, optionally after a specified time.

Note: When a task starts another task it may be pre-empted by the new task.

Note: A task can cause itself to restart, but should always choose an appropriate time delay before the restart occurs to ensure lower priority tasks are not locked out.

task job:

execution instance of a task. Please see ‘job’ above.

task jobs limit:

this integer is the maximum number of current execution instances of the task (i.e. jobs) that can be present at one time. It is set when the task is created. An attempt to create more than this number of current jobs will fail and result in an error being logged and the system state variable being updated.

task pre-emption threshold:

a task’s pre-emption threshold determines the priority required to pre-empt the task.

Note: A task’s pre-emption threshold is never lower priority than the task’s priority.

Note: It can be used to avoid context switch overheads for tasks with short run times and makes a task atomic with regard to some but not all higher priority tasks.

task priority:

a measure of the urgency associated with executing a task’s instructions. In OCEOS task priorities are integers in the range 1 (highest task priority) to 254 (lowest task priority) and are fixed at compile time. More than one task can have the same priority. If a task does not terminate (e.g. the idle task) it must have the lowest task priority to avoid locking out other tasks, and should be the only task with that priority.

Note: Tasks with the same priority are executed in FIFO order. Time-slicing or pre-emption between tasks of the same priority does not occur in OCEOS.

Note: Selecting appropriate priorities for tasks is a key responsibility of the application developer. If done incorrectly tasks will miss their deadlines.

task state:

in OCEOS a task has two states, enabled and disabled. OCEOS provides service calls to switch a task between states. Execution instances of a task (i.e. jobs) can only be created if the task is enabled. Disabling a task terminates all pending jobs of that task. A task can only be re-enabled once a previous disabling has completed.

terminate:

when a job finishes execution it is said to terminate.

Note: In OCEOS every job except perhaps the idle job terminates after a finite execution time.

Note: A job usually terminates itself by exiting its principal function.

Note: In OCEOS an active job automatically terminates and becomes a pending job on the pending jobs queue of a counting semaphore or data queue and/or on the timed actions queue if nothing is available when accessing the semaphore or data queue and the restart option has been chosen. The job restarts when the semaphore is signalled or queue written, or after a timeout.

Note: OCEOS terminates all a task’s pending jobs when it disables a task.

Note: On job termination OCEOS updates task parameters such as maximum time to completion and maximum number of pre-emptions. Error conditions are logged and the system state variable updated.

timed actions queue:

a priority queue of pending actions and their associated start times, with queue priority based on time.

It is linked to a high priority hardware timer which is set to interrupt at the time of the first action on the priority queue. After the timed actions have been carried out the timer is reset to interrupt at the start time of the earliest remaining action.

timed action:

an action to be done at a specified time involving either transferring a job to the ready queue or outputting a value to an address. Involves specifying a forward time tolerance and a backward time tolerance. When a timed action occurs this action and other actions whose forward timing tolerances include the current time are carried out. The backward tolerance allows the timer interrupt handler perform the action if the current time is later than the requested time by no more than this amount. If set to zero late actions are not performed. All late actions are logged and the system state variable updated.
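One possible reading of the forward and backward tolerances is sketched below: an action may be carried out early by at most its forward tolerance and late by at most its backward tolerance. The names, the time unit and this interpretation are assumptions and are not confirmed by the text above.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    uint64_t requested_time;
    uint64_t forward_tolerance;   /* how early the action may be performed */
    uint64_t backward_tolerance;  /* how late the action may be performed */
} timed_action;

typedef enum { ACTION_NOT_YET, ACTION_PERFORM, ACTION_TOO_LATE } action_decision;

/* Decides what the timer interrupt handler should do with an action at time now. */
action_decision timed_action_decide(const timed_action *a, uint64_t now)
{
    assert(a != NULL);
    if (now < a->requested_time) {
        return (a->requested_time - now <= a->forward_tolerance)
                   ? ACTION_PERFORM : ACTION_NOT_YET;
    }
    /* On time or late: a zero backward tolerance rejects any lateness. */
    return (now - a->requested_time <= a->backward_tolerance)
               ? ACTION_PERFORM : ACTION_TOO_LATE;
}
```

Under this reading, a single timer interrupt can service several queued actions whose tolerance windows overlap the current time, which reduces the number of interrupts needed.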

trap:

causes a transfer of control to an address associated with the trap’s identity number. There are three main types of trap. Exceptions are generated by the CPU itself as a result of some exceptional condition such as divide by zero. Software traps are generated by special software trap instructions that give the trap number. Interrupts are caused by external devices.

Note: when a trap occurs this causes traps to be disabled, a trap should not re-occur until either the trap handler has been exited thus re-enabling traps or traps have been re-enabled by a special instruction.

Note: traps should be disabled for the minimum time possible to avoid missing interrupts or delaying the response to them.

trap handler:

code that takes the steps immediately necessary to deal with a trap (including interrupts).

Note: for an interrupt this code typically resets the cause of the interrupt, takes into account the times provided by the interrupt controller hardware, and may request OCEOS to start a task.

trap vector table:

traps (including interrupts) use their identity number to vector to a location in this table at which to continue execution.

Note: Using BCC vectoring can be set to the same location for all traps/interrupts, or to a separate table entry for each trap/interrupt.

user mode:

Code running in user mode must delegate most access to hardware to kernel mode software using a software trap or traps. This mode is often not used in embedded software where the application code typically requires direct access to the hardware. In OCEOS application code is expected to run in kernel mode.

wait:

please see ‘mutex’ and ‘counting semaphore’.

Note: OCEOS does not provide a wait(event) service call, wait(counting semaphore) provides a similar capability as an interrupt caused by an event can signal(counting semaphore).

waiting:

an active job state in which a job has commenced execution but is not able to continue and must wait for a resource, retaining its current stack frame and other context and allowing lower priority jobs to run.

Note: In OCEOS this state cannot occur. Waiting is done only by a pending job, i.e. a job that has not yet started execution and so has no current stack frame, such jobs are described as ‘pending’ rather than ‘waiting’. An active job, i.e. a job that has commenced execution, may be pre-empted by a higher priority job and have to wait to regain the CPU until a higher priority task finishes, but never waits for anything except termination of a higher priority job or the ending of an interrupt handling routine.

warning:

an OCEOS return value that indicates execution was not normal.

Purpose of the Software

OCEOS is a SPARC V8 object library which provides directives to schedule fixed priority tasks, and supports mutexes, semaphores, data queues, timed outputs, and interrupt handling.

Directives are provided for logging, error handling, GR716 memory protection, and GR716 watchdog control.

External view of the software

OCEOS is provided as an object library, documentation and example source code.

The files provided include:

- This User Manual

- OCEOS object library (liboceos.a & liboceosrex.a)

- OCEOS header files (oceos.h etc.)

- Application example (asw.h, asw.c, oceos_config.h, oceos_config.c)

- UART driver example (uart.h, uart.c, oceos_config.h, oceos_config.c)

The OCEOS build and test environment requires the following:

- Build environment (compiler, linker, libraries, etc.) Download here.

- Debug software tool e.g. OCE’s DMON. Evaluation download.

Operations environment

General

Real time software is often written as a set of trap/interrupt handlers and tasks managed by a RTOS.

The trap/interrupt handlers start due to anomalous conditions or external happenings. They carry out the immediately necessary processing, and may ask the RTOS to start a task to complete the processing.

The RTOS starts tasks and schedules them for execution based on priority. In hard real time systems scheduling must ensure that each task completes no later than its deadline.

Being early can also be a problem. OCEOS provides a timed output service that allows a task’s output to be set for a precise time independently of scheduling.

OCEOS supports up to 255 tasks, and up to 15 current execution instances of each task. Each task has a fixed priority. There are 254 task priorities, 1 (highest task priority) to 254 (lowest task priority).

More than one task may have the same priority. Tasks of the same priority are FIFO scheduled. Time slicing between tasks does not occur in OCEOS.

In OCEOS each task has a pre-emption threshold. A task can only be pre-empted by a task with a higher priority than this threshold.

Pre-emptions and any traps/interrupts that occur will delay a task’s completion and potentially cause it to miss its deadline. Careful analysis is needed to ensure that task deadlines are always met.

OCEOS supports this analysis and allows relatively simple determination of worst case behaviour.

Problems such as unbounded priority inversion, chained blocking, and deadlocks cannot occur in OCEOS.

OCEOS provides mutexes to protect critical shared code or data, and inter-task communication using semaphores and queues.

A system state variable provides a summary of the current state of the system. Error conditions such as missed deadlines are logged and the system state variable updated. If the system state is not normal actions such as disabling a task or resetting the system may be taken.

OCEOS does not allow dynamic creation of tasks at run time. Virtual memory is not supported. Task priorities are fixed.

OCEOS is based on the Stack Resource Policy extension of the Priority Ceiling Protocol [BAK91].

OCEOS is provided as a library and is statically linked with an application. Services not needed by an application are omitted by the linker.

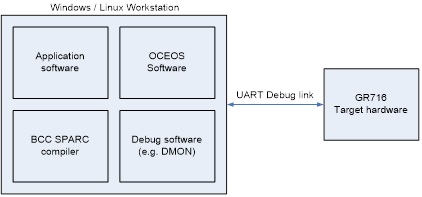

Hardware configuration

OCEOS is a library of directives that can be used to build an application to run on a GR716 microcontroller [IRD-GR716].

OCEOS and the application are expected to fit in the internal GR716 memory.

The development environment for an OCEOS application requires the following components:

- GR716 circuit board with debug serial interface connector

- PC or Linux workstation with serial interface and cable to connect GR716 debug port

- OCEOS software distribution

- BCC compiler from Cobham-Gaisler

- Debug software tool e.g. DMON from OCE

Software configuration

Software that uses OCEOS is structured as initialization code, a set of interrupt/trap handlers, OCEOS code, and a set of tasks. The specific application determines the tasks and also parts of the handler and initialization code.

The interrupt/trap handlers respond to events, do the immediately necessary processing, and typically ask OCEOS to start a task to complete the processing. OCEOS then creates an execution instance of the task, a ‘job’, and either puts it into immediate execution or on the ready queue or the timed jobs queue waiting to be scheduled for execution.

OCEOS schedules jobs based on their priority and may pre-empt the processor from a job with a lower pre-emption threshold before that job completes in order to start a higher priority job. OCEOS maintains a system priority ceiling based on currently locked resources and the pre-emption threshold of the currently executing job. Only jobs with higher priority than this ceiling can pre-empt. Jobs with the same priority are scheduled according to time of arrival on the ready queue. Time slicing or pre-emption between jobs of the same priority does not take place.

A job may have a deadline by which it must complete. It is the responsibility of the software developer to assign task priorities so that all jobs are guaranteed to meet their deadlines.

In OCEOS mutual exclusion of tasks is supported by mutexes. Counting semaphores and data queues support task synchronization and the exchange of data between jobs.

OCEOS maintains a system state variable and system log. If the state variable is not normal OCEOS may call a user defined function to deal with whatever condition has occurred.

Tasks

In OCEOS all tasks are defined at compile time and each task is assigned a fixed priority, a pre-emption threshold, a current jobs limit, a maximum start to completion time, and a minimum inter-job time.

A task’s pre-emption threshold limits the tasks that can pre-empt it to those with higher priority than this threshold. Tasks with short run times can use this to avoid context switch overheads.

Each task has two main states, enabled or disabled. OCEOS provides system calls to change task state. The state is usually enabled when the task is created, but can be initially disabled. A disabled task will not be executed, and any attempt to start it will be logged and the system state variable updated.

When an enabled task is started, this creates an execution instance of the task, a ‘job’. A count is kept for each task of the number of times it has been started, i.e. the total number of jobs created.

Multiple jobs of the same task can be in existence simultaneously. A limit for the maximum number of these current jobs is set when a task is created, the ‘current jobs limit’. Each task has a current jobs count. This increments each time a task is started and decrements on job completion unless completion creates a further job from this task. A further execution instance of a task is not created if the current jobs count has reached the current jobs limit. Such job creation failures are logged and the system state updated.

For each task a record is kept of the shortest time between attempts to create an execution instance of it, i.e. a new job, and the maximum time between these attempts. For each task a record is also kept of the maximum time a job was waiting before starting, the minimum execution time, the maximum execution time, the maximum time from job creation to completion, and the maximum number of job pre-emptions. The system state variable is updated if necessary.

Task chaining is supported. A job can create a new job and pass this to the scheduler or place it on the timed jobs queue.

Tasks provide a start address for a termination routine for use if task execution must be abandoned.
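The interaction between the enabled state, the current jobs count and the current jobs limit can be illustrated with a small model. This is only a sketch: the type `task_model` and the functions `task_start` and `job_complete` are hypothetical names invented for illustration and are not OCEOS directives. The failure counter stands in for the logging and system state update, and the task chaining case (completion creating a further job of the same task) is not modelled.

```c
#include <stdint.h>

/* Hypothetical model of the per-task counters; not the OCEOS data layout. */
typedef struct {
    uint8_t  jobs_limit;      /* 'current jobs limit' set when the task is created */
    uint8_t  jobs_current;    /* current jobs count */
    uint32_t jobs_total;      /* total number of jobs created */
    uint32_t start_failures;  /* stands in for log entry + system state update */
    int      enabled;         /* non-zero if the task is enabled */
} task_model;

/* Try to create a job. Returns 1 if a job was created, 0 if the request was refused. */
int task_start(task_model *t)
{
    if (!t->enabled || t->jobs_current >= t->jobs_limit) {
        t->start_failures++;
        return 0;
    }
    t->jobs_current++;
    t->jobs_total++;
    return 1;
}

/* Record completion of one job of the task. */
void job_complete(task_model *t)
{
    if (t->jobs_current > 0)
        t->jobs_current--;
}
```

With a limit of 2, a third start request is refused until one of the two current jobs completes.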

Jobs

The request to execute a task, i.e. create a job, involves a parameter address that is passed to the job. A job can require varying times to complete, but with one exception its maximum execution time and maximum resource requirements are finite and known at compile time. The one exception is the unique lowest priority ‘idle job’, which has no execution time limitation. Typically this ‘idle job’ is not idle, but used for system monitoring, logging, and initiating system correction activities. To save power it may put the CPU in sleep mode waiting for interrupts. A job has two principal states, pending and active. A pending job has not started execution and is either on the ready queue or on the pending jobs queue of a semaphore or data queue, or on the timed jobs queue. Only pending jobs wait on resources. In OCEOS a pending job becomes active when it first starts execution. An active job is either running on the CPU or on the ready queue after being pre-empted. An active job may have to wait on the ready queue for the CPU but never has to wait for any other resource and is never on a pending jobs queue. For each job a record is kept of the time it was created, the time it became active, the execution time, the time it completed, and the number of times it was pre-empted. When a job completes, these job records are used to update the task records. Data can be passed between jobs of the same or different tasks by using statically allocated variables, counting semaphores, or data queues. A job completes with a call to OCEOS and may create another job and place this on the ready queue or on the timed jobs queue.

Ready Queue

This priority queue contains pending and active jobs ordered highest priority first, and within priority earliest arrival first. The scheduler places the first job on this queue into execution if and only if its priority is higher than the system priority ceiling. When it does so it updates the system priority ceiling to the pre-emption threshold of this job. OCEOS places pending jobs on this queue due to an interrupt or trap, or as a result of a system call from the current job, a semaphore signal or queue write, or a timeout. Each job in the Ready Queue is tagged with an ‘origin’ giving how the job came to be placed on the Ready Queue including time and identification of any other queue from which it was removed. There can be multiple occurrences of jobs from the same task on the Ready Queue, each with its origin. Initially this queue contains the Idle Job, which must have the lowest priority.
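The ordering rule of the ready queue (highest priority first, then earliest arrival) can be expressed as a comparison function. The sketch below is an illustration only: `ready_entry`, `ready_cmp` and `ready_sort` are hypothetical names, the arrival field is assumed to be a simple sequence number that does not wrap, and `qsort` is used for brevity where a kernel would more likely insert into a fixed-size array. Priorities follow the numbering given earlier, 1 being the highest and 254 the lowest.

```c
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical ready queue entry; not the OCEOS data layout. */
typedef struct {
    uint8_t  priority;  /* 1 = highest priority ... 254 = lowest */
    uint32_t arrival;   /* assumed arrival sequence number, smaller = earlier */
    uint8_t  task_id;
} ready_entry;

/* Orders by priority (numerically smaller first), then by earliest arrival. */
static int ready_cmp(const void *pa, const void *pb)
{
    const ready_entry *a = pa;
    const ready_entry *b = pb;
    if (a->priority != b->priority)
        return a->priority < b->priority ? -1 : 1;
    if (a->arrival != b->arrival)
        return a->arrival < b->arrival ? -1 : 1;
    return 0;
}

/* Sorts n entries into ready queue order. */
void ready_sort(ready_entry *q, size_t n)
{
    qsort(q, n, sizeof q[0], ready_cmp);
}
```

After sorting, the entry at index 0 is the job the scheduler would consider first, and the idle job (lowest priority) ends up last.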

Timed jobs queue

This is a priority queue of pending jobs and their associated start times, with queue priority based on start times. It is linked to a high priority hardware timer which is set to interrupt at the start time of the first job on the priority queue. When this interrupt occurs this job and other jobs within a timing tolerance of the current time are transferred to the ready queue and the timer is reset to interrupt at the start time of the highest remaining pending job. Jobs transferred to the ready queue have their origins set to timeout and are also removed from the pending list of any counting semaphore or data queue on which they are present.
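What the timer interrupt does with the timed jobs queue can be sketched as follows. The names `timed_job` and `timed_transfer` are hypothetical, the queue is assumed to be an array already sorted by start time, and time is assumed to be a 64-bit count that does not overflow. Setting the job origin and removing the job from any semaphore or data queue pending list is left out.

```c
#include <stdint.h>
#include <stddef.h>

/* Hypothetical timed jobs queue entry. */
typedef struct {
    uint64_t start_time;  /* requested start time, in timer ticks */
    uint8_t  task_id;
} timed_job;

/*
 * Moves every job whose start time is no later than now + tolerance from the
 * front of the sorted queue into out[], compacts the queue and updates *n.
 * Returns the number of jobs moved (at most out_cap).
 */
size_t timed_transfer(timed_job *queue, size_t *n, uint64_t now,
                      uint64_t tolerance, timed_job *out, size_t out_cap)
{
    size_t moved = 0;
    while (moved < *n && moved < out_cap &&
           queue[moved].start_time <= now + tolerance) {
        out[moved] = queue[moved];
        moved++;
    }
    for (size_t i = moved; i < *n; i++)
        queue[i - moved] = queue[i];
    *n -= moved;
    return moved;
}
```

After the call, `queue[0].start_time` (if any entries remain) is the time at which the timer would next be set to interrupt.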

System Priority Ceiling

The value of this integer is the maximum of the priority ceilings of currently locked mutexes and the pre-emption threshold of the currently executing job. It is not directly accessible to an application.
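As a rough illustration, the ceiling and the rule for starting a job can be written as two small functions. This sketch assumes the priority numbering given earlier (1 is the highest priority, 254 the lowest), so the "maximum" ceiling is the numerically smallest value. The names `prio_t`, `system_ceiling` and `may_start` are hypothetical and are not part of the OCEOS interface.

```c
#include <stdint.h>
#include <stddef.h>

/* Assumption: 1 = highest priority, 254 = lowest, so a numerically smaller
   value means a higher priority. */
typedef uint8_t prio_t;

/* Returns the higher (numerically smaller) of two priorities. */
static prio_t higher_of(prio_t a, prio_t b)
{
    return a < b ? a : b;
}

/* Highest of the ceilings of the locked mutexes and the pre-emption
   threshold of the currently executing job. */
prio_t system_ceiling(const prio_t *locked_ceilings, size_t n_locked,
                      prio_t running_threshold)
{
    prio_t ceiling = running_threshold;
    for (size_t i = 0; i < n_locked; i++)
        ceiling = higher_of(ceiling, locked_ceilings[i]);
    return ceiling;
}

/* A job may be put into execution only if its priority is strictly higher
   than the system priority ceiling. */
int may_start(prio_t job_priority, prio_t ceiling)
{
    return job_priority < ceiling;
}
```

For example, with mutexes of ceiling 20 and 5 locked and a running job whose threshold is 30, the ceiling is 5, so a job of priority 4 may start but jobs of priority 5 or 10 remain pending.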

Scheduling

OCEOS schedules execution instances of tasks, i.e. jobs.

The scheduler places a job into execution if and only if its priority is higher than the system priority ceiling. When it does so it updates the system priority ceiling to the pre-emption threshold of this job.

Scheduling is pre-emptive and based on priority. The scheduler may pre-empt the processor from a lower priority job before that job completes to allow a higher priority job to be started.

Jobs with the same priority are scheduled based on the order of their arrival on the ready queue. Time slicing or pre-emption between jobs of the same priority does not occur.

A job with a very short run time can avoid context switch overheads by having a high pre-emption threshold. This also allows pre-emption by some higher priority tasks to be prevented. Such jobs are themselves scheduled based on priority as usual.

In OCEOS a wait for a mutex causes the system priority ceiling to be raised to the priority ceiling of the mutex unless it was already higher. The system priority ceiling returns to its previous value when the mutex is signalled.

As a result the scheduler will not start any task that waits on that mutex until the mutex has been returned, as the mutex ceiling is the priority of the highest priority task that uses the mutex.

(In other words, the wait for the mutex is done before the job starts. A higher priority job never pre-empts a lower priority job only to hand control back to it before terminating and wait for it to return a mutex.)

In OCEOS context switching between tasks is minimized. A lower priority job only resumes execution when a higher priority job has completed, thus allowing stack sharing.

In OCEOS problems such as unbounded priority inversion, chained blocking, and deadlocks cannot occur, all tasks can share the same stack thus saving memory, and schedulability analysis is simplified.

Un-necessary blocking can occur for a job that sometimes but not always does a ‘wait’ for a mutex as this job will remain pending if the mutex is locked even if the job would have avoided the ‘wait’.

This un-necessary blocking may seldom occur, is limited in duration, and can be avoided using task chaining.

This approach is based on the Stack Resource Policy extension of the Priority Ceiling Protocol ([BAK91], [Buttazzo2017]).

Many data structures cannot be fully accessed with a single instruction. Errors can result if a job using such a structure is pre-empted by another job that also uses the structure.

To address this OCEOS provides up to 63 mutual exclusion locks (‘mutexes’). Each shared data structure or critical code segment typically is associated with its own mutex.

Any job that uses a shared item should first acquire its mutex from OCEOS, and when finished with the item return the mutex. The number of instructions for which the mutex is held must be finite.

OCEOS allows a mutex to be held by only one job at any one time. A job that uses a shared data structure or critical code segment can use an associated mutex to exclude all other jobs.

N.B. An OS does not prevent a shared resource being accessed if no attempt is made to acquire its mutex. The software developer must ensure this is done before the shared resource is used.

Mutexes

In OCEOS all mutexes are defined at compile time and the tasks that use them known. A job obtains and returns a mutex by ‘wait’ and ‘signal’ calls to OCEOS.

Each mutex has a priority ceiling, the priority of the highest priority job that can ‘wait’ for that mutex. This is identified by the application developer and used in defining the mutex.

At compile time it is possible to determine the maximum time for which a mutex can be held by examining the source code of each task that uses the mutex.

If a job simultaneously holds more than one mutex, this must be done in a LIFO nested way,

e.g. Wait1, Wait2, Wait3, Signal3, Signal2, Signal1.

The maximum time across all mutexes for which a mutex can be held gives the maximum time for which a higher priority task can be blocked waiting for a mutex (assuming mutex overlaps are nested).

In OCEOS when a mutex ‘wait’ call succeeds, the current system priority ceiling value is replaced by a value derived from the mutex and the current system priority ceiling.

When a mutex ‘signal’ call succeeds, the previous system priority ceiling is restored and OCEOS automatically reschedules and pre-empts if appropriate.
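The way the system priority ceiling is saved on a successful ‘wait’ and restored on the matching ‘signal’ can be modelled with a small stack, which also shows why nested mutexes have to be released in LIFO order. This is a sketch under stated assumptions: `ceiling_model`, `model_mutex_wait`, `model_mutex_signal` and `MAX_NESTING` are hypothetical names, priorities use the 1 = highest numbering, and the model simply rejects an out-of-order ‘signal’ with -1.

```c
#include <stdint.h>

#define MAX_NESTING 8  /* hypothetical nesting depth for this model */

/* Hypothetical model of ceiling save/restore; not the OCEOS implementation. */
typedef struct {
    uint8_t ceiling;             /* current system priority ceiling */
    uint8_t saved[MAX_NESTING];  /* ceilings saved by successful waits */
    uint8_t held[MAX_NESTING];   /* mutex ids in order of acquisition */
    int     depth;               /* number of mutexes currently held */
} ceiling_model;

/* Acquire a mutex: save the current ceiling, then raise it to the mutex
   ceiling unless it is already higher. Returns 0 on success, -1 if full. */
int model_mutex_wait(ceiling_model *s, uint8_t mutex_id, uint8_t mutex_ceiling)
{
    if (s->depth >= MAX_NESTING)
        return -1;
    s->saved[s->depth] = s->ceiling;
    s->held[s->depth] = mutex_id;
    s->depth++;
    if (mutex_ceiling < s->ceiling)  /* numerically smaller = higher priority */
        s->ceiling = mutex_ceiling;
    return 0;
}

/* Release the most recently acquired mutex and restore the previous ceiling.
   Returns 0 on success, -1 if mutex_id is not the innermost held mutex. */
int model_mutex_signal(ceiling_model *s, uint8_t mutex_id)
{
    if (s->depth == 0 || s->held[s->depth - 1] != mutex_id)
        return -1;
    s->depth--;
    s->ceiling = s->saved[s->depth];
    return 0;
}
```

Starting from a ceiling of 50, Wait1 (ceiling 20) then Wait2 (ceiling 30) leaves the system ceiling at 20; Signal2 keeps it at 20 and Signal1 restores 50, at which point a reschedule would take place.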

In OCEOS a pending job only becomes active if its priority is higher than the system priority ceiling. As a result when a job becomes active no mutex that could block it is currently held.

In OCEOS if a job does a mutex ‘wait’ and then a ‘wait’ on the same mutex without an intervening ‘signal’ the second ‘wait’ is treated as a no-operation but is logged and the system state updated.

In OCEOS a ‘signal’ by a job on a mutex not currently held by the job is treated as a no-operation but is logged and the system state updated.

In OCEOS if a job terminates while holding a mutex the ‘signal’ operation is performed automatically for all mutexes held by the job and this is logged and the system state updated.

Mutexes can be read by interrupt handling code but do not provide mutual exclusion for such code. Mutual exclusion in interrupt handling code is achieved by enabling and disabling traps/interrupts.

Inter-job communication

OCEOS provides two types of inter-job communication, counting semaphores and data queues. Semaphores and data queues are handled by OCEOS differently to many other OS. In most OS a job that waits on a zero semaphore or tries to read an empty data queue may be blocked at that point and wait there indefinitely or with an optional timeout. In OCEOS an active job may be pre-empted but cannot otherwise be blocked, so two options are provided in case a counting semaphore is zero when waited on or a data queue is empty when read. One option results in a value always being returned and the job continuing. The returned value will indicate that the semaphore was zero or queue empty, and the job can take this into account. In the other option, if the semaphore is zero or queue empty the job terminates and will restart from its beginning when the semaphore is signalled or queue written, or after an optional timeout. When the job becomes active again after a timeout and again encounters the second option the behaviour is like the first option, the job continues and takes into account that a timeout has occurred. (OCEOS does not support direct event handling by tasks. Similar functionality can be achieved using counting semaphores and interrupt handlers.)

Pending jobs queue

Each counting semaphore and data queue has an associated queue of pending jobs that are waiting for that semaphore or queue. Pending jobs also wait on the timed jobs queue. Pending jobs queues contain only pending jobs and not active jobs, i.e. only jobs that have not yet started execution. A pending job can be on a semaphore pending jobs queue or on a data queue pending jobs queue and also on the timed jobs queue. The amount of time a job can spend on a semaphore or data queue pending jobs queue is of indefinite duration unless it is also on the timed jobs queue. When a job is removed from a semaphore or queue pending jobs queue its origin is set accordingly. It is also removed from the timed jobs queue if present. When a timeout occurs and a job is removed from the timed jobs queue its origin is set accordingly. It is also removed from a semaphore or queue pending jobs queue if present. (Mutexes do not have pending jobs queues. A pending job waiting for a mutex waits on the ready queue. Such waits are expected to be of finite duration.) If it is necessary to pass data to a later job, a job can do this before termination using static variables, data queues or counting semaphores. All jobs on a pending jobs queue are moved to the ready queue in the order of their arrival on the pending jobs queue before any of them is scheduled.

Usually only one job is waiting on a pending jobs queue. If there is more than one, a lower priority job may have to return to the pending jobs queue because a higher priority job or an earlier job has consumed the item.

Counting Semaphores

A counting semaphore has an integer value that is always greater than or equal to zero. This value is set initially when the semaphore is created and modified by atomic wait() and signal() operations. Each counting semaphore has a pending jobs queue of pending jobs waiting for the semaphore value to become greater than zero. Unlike a mutex, which must become available after a finite number of cycles, a counting semaphore may remain unavailable, i.e. at value zero, indefinitely. When a wait operation is performed the semaphore value is decremented, unless it is already zero, and an appropriate status code is returned. Unlike many other OS, the job that performs the wait operation does not block if the semaphore value is zero. Instead OCEOS provides two options for the wait operation. In the wait_continue() option, if the semaphore value is 0 it remains unchanged and an appropriate status code is returned; the job continues, taking this returned value into account. In the wait_restart() option, if the semaphore is zero the job terminates unless it was started by a timeout, and restarts from its beginning when the semaphore is signalled or after an optional timeout. A job that was restarted as a result of a timeout does not terminate with wait_restart() if the semaphore is zero; an appropriate status is returned and the job continues, taking this into account. When the semaphore is signalled its value is incremented, and any jobs on its pending jobs queue are transferred to the ready queue and also removed from the timed jobs queue if present. The pending jobs queue order of jobs with the same priority is preserved in this transfer. The scheduler is then activated and may pre-empt the current executing job.
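The non-blocking behaviour of the wait_continue() option can be shown with a minimal model. The names `csem`, `csem_wait_continue` and `csem_signal` are hypothetical and chosen to avoid any suggestion that they are the OCEOS directives; the return values 1 and 0 stand in for the status codes. Atomicity and the transfer of pending jobs on signal are not modelled.

```c
#include <stdint.h>

/* Hypothetical counting semaphore model: just the non-negative value. */
typedef struct {
    uint32_t value;
} csem;

/* wait_continue behaviour: never blocks. Returns 1 and decrements the value
   if it was greater than zero; returns 0 and leaves it at zero otherwise. */
int csem_wait_continue(csem *s)
{
    if (s->value == 0)
        return 0;
    s->value--;
    return 1;
}

/* signal: increments the value. */
void csem_signal(csem *s)
{
    s->value++;
}
```

A job using this option checks the returned status and carries on either way, for example skipping the work that needed the semaphore when 0 is returned.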

Data Queues

These are FIFO queues of non-null void pointers ordered by arrival time. Each queue has an associated pending jobs queue, and two associated atomic operations, read() and write(). A size() operation gives the number of pointers on the queue, and the create() operation sets the maximum size of the queue. The read() operation returns the first pointer on the queue unless the queue is empty. Unlike many other OS, the job that performs the read operation does not block if the queue is empty. Instead OCEOS provides two options for the read operation to determine what should happen in that case. In the read_continue() option if the queue is empty a null pointer is returned and the job proceeds taking this into account. In the read_restart() option if the queue is empty the job terminates unless it was started by a timeout and will restart from its beginning when the queue is written or after an optional timeout. A job that was started as a result of a timeout does not terminate with read_restart() if the data queue is empty, a null pointer is returned to show there was a timeout and the job continues. A pointer is added to the queue by write(). All jobs on the pending list are moved to the ready queue and also removed from the timed jobs queue if present. The pending queue order of jobs with the same priority is preserved in the transfer. The scheduler is then activated and may pre-empt the current executing job. In case the queue is full, the new entry can be dropped or the oldest entry overwritten, depending on an option chosen when the data queue is created. An appropriate status is returned and a log entry is made if the new entry is dropped.
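The two queue-full options and the read_continue() behaviour can be sketched with a small ring buffer of pointers. Everything named here (`dq_model`, `dq_write`, `dq_read_continue`, `DQ_CAPACITY` and the policy constants) is hypothetical and only illustrates the behaviour described above; the pending jobs queue, atomicity and logging are omitted, with a counter standing in for the log entry made when a new entry is dropped.

```c
#include <stddef.h>

#define DQ_CAPACITY 4  /* hypothetical maximum queue size */

typedef enum { DQ_DROP_NEW, DQ_OVERWRITE_OLDEST } dq_full_policy;

/* Hypothetical FIFO of non-null pointers. */
typedef struct {
    void          *slots[DQ_CAPACITY];
    size_t         head;     /* index of the oldest entry */
    size_t         count;    /* number of entries on the queue */
    dq_full_policy policy;   /* chosen when the queue is created */
    unsigned       dropped;  /* stands in for the log entry on a drop */
} dq_model;

/* Adds a pointer. Returns 1 if stored, 0 if it was null or dropped. */
int dq_write(dq_model *q, void *item)
{
    if (item == NULL)
        return 0;
    if (q->count == DQ_CAPACITY) {
        if (q->policy == DQ_DROP_NEW) {
            q->dropped++;
            return 0;
        }
        q->head = (q->head + 1) % DQ_CAPACITY;  /* discard the oldest entry */
        q->count--;
    }
    q->slots[(q->head + q->count) % DQ_CAPACITY] = item;
    q->count++;
    return 1;
}

/* read_continue behaviour: returns the oldest pointer, or NULL if empty. */
void *dq_read_continue(dq_model *q)
{
    if (q->count == 0)
        return NULL;
    void *item = q->slots[q->head];
    q->head = (q->head + 1) % DQ_CAPACITY;
    q->count--;
    return item;
}
```

Because entries are non-null pointers, a NULL result from `dq_read_continue` unambiguously means the queue was empty.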

Timed output queue

OCEOS uses this queue and associated interrupt to allow output of a value to an address to be scheduled to occur at a precise time. This queue is a priority queue of pending outputs (output address, value, type, mask, forward tolerance, backward tolerance, time), with queue priority based on output times. It is linked to a high priority hardware timer which is set to interrupt at the time of the first output on the queue. When the interrupt occurs this output and other outputs within the forward timing tolerance of the current time are carried out. The backward tolerance allows the timer interrupt handler to perform the output if the current time is later than the requested time by no more than this amount. If set to zero late outputs are not performed. All late outputs are logged and the state variable updated. The high priority timer interrupt is reset after queue additions and removals so as to ensure the interrupt happens at the nearest pending output time. When an output request is added to the queue, the difference between the requested output time and the current next output time is used to set a new interrupt if the requested time is earlier. When this interrupt occurs, all queued outputs within a timing tolerance of the current time are acted upon and the timer is reset to interrupt again at the earliest remaining request. Provision is made for error reporting if the current time is greater than the requested time by more than the allowed tolerance. The GR716 has an interrupt timestamp facility for certain interrupts that can be passed to any job started by the interrupt. An interrupt or a job can calculate the time at which an output should be made and the value of that output. If the corresponding mask bits are not all 1s, the address is read and written back with bits not masked left unchanged. The precision of the output time depends on the resolution of the timer, which counts system bus cycles rather than CPU clock cycles.
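The masked output step is a read-modify-write, which can be sketched as below. This assumes a 32-bit output and that a mask bit of 1 selects a bit to be taken from the new value, with mask bits of 0 leaving the existing bit unchanged; the function names `masked_output_value` and `masked_output` are hypothetical and not part of the OCEOS interface.

```c
#include <stdint.h>

/* Combines the current content with the new value: bits set in mask come
   from value, bits clear in mask keep their current content. */
uint32_t masked_output_value(uint32_t current, uint32_t value, uint32_t mask)
{
    return (current & ~mask) | (value & mask);
}

/* Performs the output. If the mask is all 1s the value is written directly;
   otherwise the address is read and written back with unmasked bits kept. */
void masked_output(volatile uint32_t *address, uint32_t value, uint32_t mask)
{
    if (mask == UINT32_MAX)
        *address = value;
    else
        *address = masked_output_value(*address, value, mask);
}
```

For instance, writing 0x0000FFFF with mask 0x00FF00FF to a location holding 0xAAAA5555 leaves 0xAA0055FF at that location.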

System State

OCEOS maintains a system state variable that is updated whenever there is anomalous behaviour such as attempting to create more than the allowed number of jobs or writing to a queue that is full.

If this variable is not its normal value, depending on the anomaly OCEOS may call a user defined function to deal with the anomaly.

This typically reads the system log and the system state variable to determine the background to the detected anomaly.

Please consult the header file oceos_codes.h for the system state variable codes. These include anomalies such as (the indexes below are not the codes used):

- Uncorrectable error in external memory

- Invalid access memory protection unit 1

- Invalid access memory protection unit 2

- An attempt to start a disabled task

- An attempt to execute a task when its jobs limit is already reached

- Job time from creation to completion exceeds allowed maximum for a task

- Minimum time between job creations is less than the allowed minimum for task

- Ready queue unable to accept job as result of being full

- Mutex wait() when mutex already held

- Mutex signal() when not already held

- Mutex not returned before job terminates

- Attempt to add job to semaphore pending list when list full

- Data queue write when queue already full

- Attempt to add job to queue pending list when list full

- Timed jobs queue write when queue already full

- Timed jobs queue late job transfer to scheduler

- Timed output queue write when queue already full

- Timed output late

- Remove job from ready queue failed

- Job being pre-empted was not executing

System Log

This contains time stamped records of anomalies detected during execution. It is structured as a circular buffer. System calls are provided to initialize the log, set its maximum size, and return the number of entries. OCEOS will call a user defined function when the log is approximately 70% full.
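A circular log with a "nearly full" notification can be modelled as follows. This is only a sketch: `log_model`, `log_add`, `LOG_CAPACITY` and the example handler `count_nearly_full` are hypothetical, the threshold is taken as 70% of capacity rounded up, and the handler is called once when the fill level first reaches that threshold. Reading or clearing entries, which would lower the fill level again, is not modelled.

```c
#include <stdint.h>

#define LOG_CAPACITY  10                             /* hypothetical log size */
#define LOG_THRESHOLD ((LOG_CAPACITY * 7 + 9) / 10)  /* 70%, rounded up */

typedef struct {
    uint64_t time;  /* time stamp of the anomaly */
    uint32_t code;  /* anomaly code */
} log_entry;

/* Hypothetical circular log; not the OCEOS data layout. */
typedef struct {
    log_entry entries[LOG_CAPACITY];
    unsigned  next;                   /* index of the next entry to write */
    unsigned  count;                  /* number of valid entries */
    void    (*on_nearly_full)(void);  /* user defined function, may be NULL */
} log_model;

/* Example handler that only counts how often it was called. */
static unsigned nearly_full_calls;
void count_nearly_full(void)
{
    nearly_full_calls++;
}

/* Adds an entry, overwriting the oldest one once the buffer has wrapped. */
void log_add(log_model *log, uint64_t time, uint32_t code)
{
    log->entries[log->next].time = time;
    log->entries[log->next].code = code;
    log->next = (log->next + 1) % LOG_CAPACITY;
    if (log->count < LOG_CAPACITY) {
        log->count++;
        if (log->count == LOG_THRESHOLD && log->on_nearly_full)
            log->on_nearly_full();
    }
}
```

With a capacity of 10 the handler runs when the seventh entry is added, giving the application a chance to read out the log before older entries are overwritten.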

Operational constraints

OCEOS was developed to run on the GR716 microcontroller. The constraints associated with the use of OCEOS are:

- OCEOS is designed to be run on the GR716 Microcontroller. The GR716 contains sufficient internal RAM to run OCEOS.

- OCEOS requires the BCC libraries and can use either the clang or gcc compilers.

- The application must initialise the GR716 hardware using either (i) the BCC board support package or (ii) bespoke initialisation code written by the application developer.

- OCEOS does not provide any device drivers. An example is provided showing how to use the UART device. This can be used as a template for other devices.

- OCEOS provides functions to be used by the application to diagnose error conditions. It is the responsibility of the application to take appropriate action when an error is detected.

Operations basics

OCEOS is provided as an object library, header file(s), and application source files that can be compiled and linked with the standard BCC gcc or clang tools and libraries to provide a real-time multitasking application. OCEOS uses BCC library calls for some system functions. These functions are provided in the BCC board support package for the GR716. The memory map below shows the object modules included for a simple (unoptimized) OCEOS application.

| Section | Address | Description | Size |

|---|---|---|---|

| .rodata | 0x0000000030000000 | asw1.o | 1 |

| .rodata | 0x0000000030000004 | oceos_config.o | 16 |

| .rodata | 0x0000000030000014 | libbcc.a(trap_table_svt_tables.S.o) | 192 |

| .rodata | 0x00000000300000d4 | libbcc.a(trap_table_svt_level0.S.o) | 128 |

| .rodata | 0x0000000030000154 | libbcc.a(bsp_sysfreq.c.o) | 4 |

| .rodata | 0x0000000030000158 | libc_nano.a(lib_a-impure.o) | 4 |

| .data | 0x0000000030000160 | crtbegin.o | 4 |

| .data | 0x0000000030000164 | asw1.o | 4 |

| .data | 0x0000000030000168 | liboceos.a(tasks.o) | 4 |

| .data | 0x000000003000016c | liboceos.a(timed_action.o) | 1 |

| .data | 0x000000003000016d | libbcc.a(int_nest.c.o) | 16 |

| .data | 0x0000000030000180 | libbcc.a(leon_info.S.o) | 16 |

| .data | 0x0000000030000190 | libbcc.a(argv.c.o) | 4 |

| .data | 0x0000000030000194 | libbcc.a(int_irqmp_handle.c.o) | 16 |

| .data | 0x00000000300001a4 | libbcc.a(con_handle.c.o) | 4 |

| .data | 0x00000000300001a8 | libc_nano.a(lib_a-impure.o) | 100 |

| .data | 0x000000003000020c | libc_nano.a(lib_a-__atexit.o) | 4 |

| .bss | 0x0000000030000210 | crt0.S.o | 4 |

| .bss | 0x0000000030000214 | crtbegin.o | 32 |

| .bss | 0x0000000030000234 | asw1.o | 4 |

| .bss | 0x0000000030000238 | oceos_config.o | 296 |

| .bss | 0x0000000030000360 | liboceos.a(initialisation.o) | 8 |

| .bss | 0x0000000030000368 | liboceos.a(interrupt.o) | 1 |

| .bss | 0x000000003000036c | liboceos.a(tasks.o) | 64 |

| .bss | 0x00000000300003ac | liboceos.a(timed_action.o) | 38 |

| .bss | 0x00000000300003d4 | liboceos.a(oceos_timer.o) | 4 |

| .bss | 0x00000000300003d8 | libbcc.a(flush_windows_trap.S.o) | 20 |

| .bss | 0x00000000300003ec | libbcc.a(argv.c.o) | 8 |

| .bss | 0x00000000300003f4 | libc_nano.a(lib_a-__atexit.o) | 140 |

| .bss | 0x0000000030000480 | libc_nano.a(lib_a-__call_atexit.o) | 4 |

| COMMON | 0x0000000030000484 | asw1.o | 16 |

| COMMON | 0x0000000030000494 | oceos_config.o | 704 |

| COMMON | 0x0000000030000754 | liboceos.a(initialisation.o) | 8 |

| COMMON | 0x000000003000075c | liboceos.a(tasks.o) | 52 |

| COMMON | 0x0000000030000790 | libbcc.a(isr.c.o) | 128 |

| .text | 0x0000000031000000 | trap_table_svt.S.o | 72 |

| .text | 0x0000000031000048 | crt0.S.o | 200 |

| .text | 0x0000000031000110 | crtbegin.o | 384 |

| .text | 0x0000000031000290 | asw1.o | 1,296 |

| .text | 0x00000000310007a0 | oceos_config.o | 1,056 |

| .text | 0x0000000031000bc0 | liboceos.a(initialisation.o) | 17,160 |

| .text | 0x0000000031004ec8 | liboceos.a(interrupt.o) | 644 |

| .text | 0x000000003100514c | liboceos.a(tasks.o) | 12,388 |

| .text | 0x00000000310081b0 | liboceos.a(gr716.o) | 28 |

| .text | 0x00000000310081cc | liboceos.a(logging.o) | 4,312 |

| .text | 0x00000000310092a4 | liboceos.a(timed_action.o) | 9,380 |

| .text | 0x000000003100b748 | liboceos.a(oceos_timer.o) | 1,504 |

| .text | 0x000000003100bd28 | liboceos.a(oceos_interrupt_trap_handler.o) | 568 |

| .text | 0x000000003100bf60 | liboceos.a(FDIR.o) | 756 |

| .text | 0x000000003100c254 | liboceos.a(dataq.o) | 8,560 |

| .text | 0x000000003100e3c4 | liboceos.a(semaphore.o) | 6,628 |

| .text | 0x000000003100fda8 | libbcc.a(trap_table_svt_tables.S.o) | 4 |

| .text | 0x000000003100fdac | libbcc.a(reset_trap_svt.S.o) | 100 |

| .text | 0x000000003100fe10 | libbcc.a(window_trap.S.o) | 176 |

| .text | 0x000000003100fec0 | libbcc.a(interrupt_trap.S.o) | 432 |

| .text | 0x0000000031010070 | libbcc.a(flush_windows_trap.S.o) | 136 |

| .text | 0x00000000310100f8 | libbcc.a(sw_trap_set_pil.S.o) | 44 |

| .text | 0x0000000031010124 | libbcc.a(trap.c.o) | 204 |

| .text | 0x00000000310101f0 | libbcc.a(isr_register_node.c.o) | 188 |

| .text | 0x00000000310102ac | libbcc.a(isr_unregister_node.c.o) | 184 |

| .text | 0x0000000031010364 | libbcc.a(int_disable_nesting.c.o) | 80 |

| .text | 0x00000000310103b4 | libbcc.a(int_enable_nesting.c.o) | 136 |

| .text | 0x000000003101043c | libbcc.a(init.S.o) | 8 |

| .text | 0x0000000031010444 | libbcc.a(leon_info.S.o) | 152 |

| .text | 0x00000000310104dc | libbcc.a(dwzero.S.o) | 44 |

| .text | 0x0000000031010508 | libbcc.a(copy_data.c.o) | 72 |

| .text | 0x0000000031010550 | libbcc.a(lowlevel.S.o) | 140 |

| .text | 0x00000000310105dc | libbcc.a(lowlevel_leon3.S.o) | 72 |

| .text | 0x0000000031010624 | libbcc.a(int_irqmp.c.o) | 592 |

| .text | 0x0000000031010874 | libbcc.a(int_irqmp_get_source.c.o) | 72 |

| .text | 0x00000000310108bc | libbcc.a(int_irqmp_init.c.o) | 8 |

| .text | 0x00000000310108c4 | libbcc.a(bsp_con_init.c.o) | 132 |

| .text | 0x0000000031010948 | libc_nano.a(lib_a-atexit.o) | 28 |

| .text | 0x0000000031010964 | libc_nano.a(lib_a-exit.o) | 72 |

| .text | 0x00000000310109ac | libc_nano.a(lib_a-memcpy.o) | 52 |

| .text | 0x00000000310109e0 | libc_nano.a(lib_a-setjmp.o) | 100 |

| .text | 0x0000000031010a44 | libc_nano.a(lib_a-__atexit.o) | 324 |

| .text | 0x0000000031010b88 | libc_nano.a(lib_a-__call_atexit.o) | 420 |

| .text | 0x0000000031010d2c | libbcc.a(_exit.S.o) | 16 |

| .text | 0x0000000031010d3c | crtend.o | 72 |

Comparison of the same task optimised with BCC compiler options -Oz and -mrex for OCEOS modules is shown below.

| Section | Description | -O0 Size | -Oz -mrex Size | % of -O0 Size |

|---|---|---|---|---|

| .rodata | asw1.o | 1 | 1 | 100% |

| .rodata | oceos_config.o | 16 | 16 | 100% |

| .data | asw1.o | 4 | 4 | 100% |

| .data | liboceos.a(tasks.o) | 4 | 4 | 100% |

| .data | liboceos.a(timed_action.o) | 1 | 0 | 0% |

| .bss | asw1.o | 4 | 4 | 100% |

| .bss | oceos_config.o | 296 | 296 | 100% |

| .bss | liboceos.a(initialisation.o) | 8 | 8 | 100% |

| .bss | liboceos.a(interrupt.o) | 1 | 1 | 100% |

| .bss | liboceos.a(tasks.o) | 64 | 52 | 81% |

| .bss | liboceos.a(timed_action.o) | 38 | 38 | 100% |

| .bss | liboceos.a(oceos_timer.o) | 4 | 0 | 0% |

| COMMON | asw1.o | 16 | 16 | 100% |

| COMMON | oceos_config.o | 704 | 704 | 100% |

| COMMON | liboceos.a(initialisation.o) | 8 | 8 | 100% |

| COMMON | liboceos.a(tasks.o) | 52 | 52 | 100% |

| .text | asw1.o | 1,296 | 518 | 40% |

| .text | oceos_config.o | 1,056 | 170 | 16% |

| .text | liboceos.a(initialisation.o) | 17,160 | 6,572 | 38% |

| .text | liboceos.a(interrupt.o) | 644 | 240 | 37% |

| .text | liboceos.a(tasks.o) | 12,388 | 4,714 | 38% |

| .text | liboceos.a(gr716.o) | 28 | 12 | 43% |

| .text | liboceos.a(logging.o) | 4,312 | 1,484 | 34% |

| .text | liboceos.a(timed_action.o) | 9,380 | 2,760 | 29% |

| .text | liboceos.a(oceos_timer.o) | 1,504 | 396 | 26% |

| .text | liboceos.a(oceos_interrupt_trap_handler.o) | 568 | 568 | 100% |

| .text | liboceos.a(FDIR.o) | 756 | 240 | 32% |

| .text | liboceos.a(dataq.o) | 8,560 | 3,350 | 39% |

| .text | liboceos.a(semaphore.o) | 6,628 | 2,474 | 37% |

| Total | | 65,501 | 24,702 | 38% |
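The percentage column is consistent with the -Oz -mrex size expressed as a percentage of the -O0 size, rounded to the nearest integer. A minimal C sketch of that arithmetic follows. The helper name size_percent is illustrative only and is not part of OCEOS.

```c
#include <stdint.h>

/* Illustrative helper, not part of OCEOS: returns the optimised size as a
 * percentage of the baseline size, rounded to the nearest integer.
 * Returns 0 when the baseline is 0 to avoid dividing by zero. */
uint32_t size_percent(uint32_t optimised, uint32_t baseline)
{
    if (baseline == 0u) {
        return 0u;
    }
    /* Adding baseline / 2 before the division rounds to nearest. */
    return (uint32_t)(((uint64_t)optimised * 100u + baseline / 2u) / baseline);
}
```

For the totals row, size_percent(24702, 65501) evaluates to 38, matching the table.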

Operations manual

General

OCEOS is structured as a set of managers each providing OCEOS service calls.

The activities supported by these managers are:

| Initialization manager : | initializing the hardware and OCEOS itself |

| Task manager : | handling tasks and jobs, including scheduling |

| Interrupt manager : | setting up trap handlers |

| Clock manager : | providing time of day, dates, and alarms |

| Timer manager : | interval timing and alarms, timed outputs and jobs |

| Mutex manager : | mutual exclusion management |

| Semaphore manager : | counting semaphore management |

| Data queue manager : | data queue management |

The directives provided by these managers are described in the following sections.

Set‐up and initialisation

Before using OCEOS the user must prepare the development environment. At a minimum this requires installing a compiler, a debug tool, and an editor.

Getting started

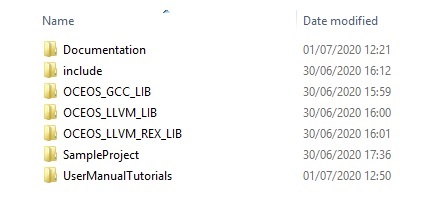

Files provided with OCEOS

Unpack the OCEOS software into an appropriate folder on the computer. The folders are as follows:

- Documentation: OCEOS User Manual and Quick Reference

- include: Include files required by the application

- OCEOS_GCC_LIB: oceos.a object library for applications to be built with gcc

- OCEOS_LLNM_LIB: oceos.a object library for applications to be built with llvm (32-bit)

- OCEOS_LLVM_REX_LIB: oceos.a object library for applications to be built with llvm (16-bit REX mode)

- Sample Project: C sources, include files, and make files for an example project

- UserManualTutorials: C sources, include files, and make files for the User Manual tutorials and application example

The source and build files associated with the example application referred to in this manual are located in the folder <installation-folder>\UserManualTutorials\asw.

asw.h

Simple application header.

asw.c

Simple application example. This file is a self-contained example of an OCEOS application including tasks, mutexes, semaphores, and data queues.

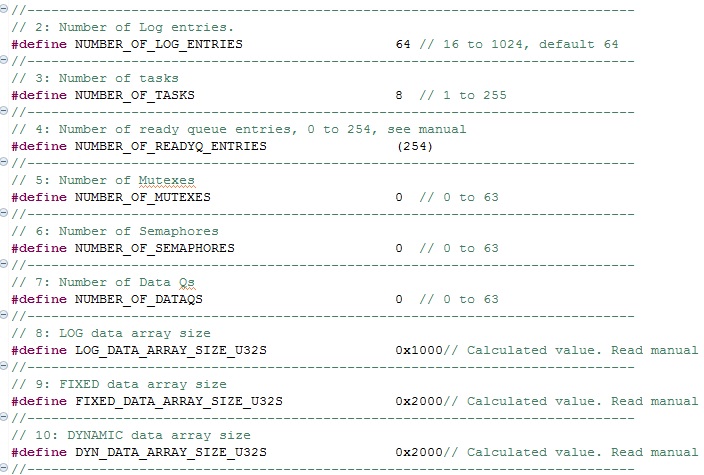

oceos_config.h

This is the OCEOS application header file where the developer specifies the number of tasks, mutexes, semaphores, dataqs, log entries, and readyq entries. These numbers are used to allocate the memory required for storing OCEOS variables. The definitions to be specified follow.

- The application must create the number of tasks, mutexes, semaphores or dataqs specified in this header, otherwise an error will be returned from oceos_init_finish().

- LOG_DATA_ARRAY_SIZE_U32S - see section OCEOS Data Areas

- FIXED_DATA_ARRAY_SIZE_U32S - see section OCEOS Data Areas

- DYN_DATA_ARRAY_SIZE_U32S - see section OCEOS Data Areas

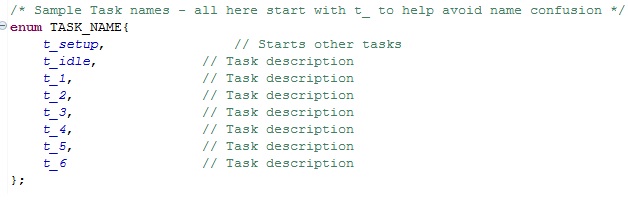

- Enumerated types are recommended for names of tasks, mutexes, semaphores and dataqs. This header file contains some examples.
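As a minimal sketch of this recommendation, the enumerations below give each task and mutex a symbolic name. Only t_setup appears in this manual. The other names, and the trailing count enumerators, are assumptions made for illustration, and the shipped oceos_config.h may differ.

```c
/* Hypothetical identifiers for illustration; the shipped oceos_config.h
 * may use different names. Each enumerator doubles as the numeric ID
 * that identifies the object to OCEOS. */
enum task_id {
    t_setup,      /* 0: task name used in this manual's example */
    t_worker,     /* 1: assumed second task */
    TASK_COUNT    /* 2: number of tasks; keep as the last enumerator */
};

enum mutex_id {
    m_shared_data,  /* 0: assumed mutex name */
    MUTEX_COUNT     /* 1: number of mutexes; keep as the last enumerator */
};
```

Keeping a count as the last enumerator makes it easy to compare the number of names against the number of objects declared in the header.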

oceos_config.c

This file contains the OCEOS data areas and the following functions:

- int application_init(): This function initialises the OCEOS data area.

- void oceos_on_error(void* ptr): The developer should code this function to implement the actions to take in case of a system error.

- void oceos_on_full_log(void* ptr): The developer should code this function, which is called when the system log is two-thirds full.
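A minimal sketch of the two handlers is given below, using the signatures listed above. The counters are assumptions made for this sketch. A real application would more likely record the event, download the log, or move to a safe state. The meaning of ptr is defined by OCEOS and is not interpreted here.

```c
#include <stdint.h>

/* Counters are assumptions for this sketch, not OCEOS variables. */
volatile uint32_t error_count = 0;
volatile uint32_t log_full_count = 0;

/* Called on a system error. The argument is ignored in this sketch. */
void oceos_on_error(void *ptr)
{
    (void)ptr;
    error_count++;
}

/* Called when the system log is two-thirds full. */
void oceos_on_full_log(void *ptr)
{
    (void)ptr;
    log_full_count++;
}
```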

makefile & makefile.mk

These files are provided to facilitate building the asw application. Some local edits are required to point to the local compiler folders.

Building the example application

Download and install the BCC2 compiler.

Use the Makefile provided or use the following commands to compile and link the application.

sparc-gaisler-elf-clang -I. -Iinclude -IC:/opt/bcc-2.1.1-llvm/sparc-gaisler-elf/bsp/gr716/include -qbsp=gr716 -mcpu=leon3 -Wall -c asw.c -o asw.o

sparc-gaisler-elf-clang -I. -Iinclude -IC:/opt/bcc-2.1.1-llvm/sparc-gaisler-elf/bsp/gr716/include -qbsp=gr716 -mcpu=leon3 -Wall -c oceos_config.c -o oceos_config.o

sparc-gaisler-elf-clang asw.o oceos_config.o -o asw-gr716.elf -qbsp=gr716 -mcpu=leon3 -qsvt -qnano -Wall -LC:/projects/oce/oceos/trunk/oceos_product/oceoc/build/ -loceos -Wl,-Map=asw-gr716.map

Notes:

- sparc-gaisler-elf-clang: Ensure the path to the compiler has been configured. The llvm clang compiler is recommended as the OCEOS library was compiled with it.

- -I. -Iinclude -I<bcc-install-folder>/bcc-2.1.1-llvm/sparc-gaisler-elf/bsp/gr716/include: The -I option passes the paths of include files to the compiler.

- -qbsp=gr716: Selects the GR716 board support package.

- -mcpu=leon3: Specifies the processor type.

- -Wall: Display all warning messages.

- -c: Compile only (no linking).

- -o <filename>: Specify the output filename.

- -qsvt: Use the single-vector trap model described in “SPARC-V8 Supplement, SPARC-V8 Embedded (V8E) Architecture Specification”.

- -qnano: Use a version of the newlib C library compiled for a reduced footprint. The nano implementations of the fprintf() and fscanf() families of functions are not fully C standard compliant. Code size can decrease by up to # KiB when printf() is used.

- -L<path-to-oceos-library-file>: Specify the path where liboceos.a is located.

- -loceos: Instructs the linker to use the library liboceos.a.

- -Wl,-Map=asw-gr716.map: Instructs the linker to generate a map file with the name asw-gr716.map. This optional map file details the memory map of all functions and data.

- Consult the BCC manual for other options.

The application executable (in this case asw-gr716.elf) can now be downloaded and executed on the target GR716 hardware. A debug tool such as DMON or GRMON is required for this. DMON provides specific support for OCEOS.

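Important Note: Up to BCC version 2.1.2, bcc_inline.h needs to be changed as it does not function as expected on the GR716. The file is located in …\bcc-2.x.x-llvm\sparc-gaisler-elf\bsp\gr716\include\bcc\ for llvm and …\bcc-2.x.x-gcc\sparc-gaisler-elf\bsp\gr716\include\bcc for gcc. In the modified section shown below, the variant guarded by __BCC_BSP_HAS_PWRPSR is commented out so that the software trap variant of bcc_set_pil_inline is always used.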

/* OCEOS comment out until BCC fix

#ifdef __BCC_BSP_HAS_PWRPSR

#include <bcc/leon.h>

static inline int bcc_set_pil_inline(int newpil)

{

uint32_t ret;

__asm__ volatile (

"sll %4, %1, %%o1\n\t"

"or %%o1, %2, %4\n\t"

".word 0x83882000\n\t"

"rd %%psr, %%o1\n\t"

"andn %%o1, %3, %%o2\n\t"

"wr %4, %%o2, %%psr\n\t"

"and %%o1, %3, %%o2\n\t"

"srl %%o2, %1, %0\n\t"

: "=r" (ret)

: "i" (PSR_PIL_BIT), "i" (PSR_ET), "i" (PSR_PIL), "r" (newpil)

: "o1", "o2"

);

return ret;

}

#else

*/

#include <bcc/leon.h>

static inline int bcc_set_pil_inline(int newpil)

{

register uint32_t _val __asm__("o0") = newpil;

/* NOTE: nop for GRLIB-TN-0018 */

__asm__ volatile (

"ta %1\nnop\n" :

"=r" (_val) :

"i" (BCC_SW_TRAP_SET_PIL), "r" (_val)

);

return _val;

}

/* For OCEOS

#endif

*/

If bcc_inline.h is not modified, traps may be left disabled or, in REX mode, an illegal opcode trap may be generated. [GRLIB]

Mode selection and control

OCEOS is provided as an object library with header files for applications wishing to use it. There are two primary modes of operation on the GR716 as follows:

- SPARC V8 32-bit mode

32-bit mode is the standard instruction word size on the SPARC V8. An OCEOS object library is provided for 32-bit mode (gcc & llvm).

- REX 16-bit mode

REX mode allows the GR716 to execute 16-bit instructions. Applications running in REX mode occupy a smaller memory footprint. The compiler option -mrex should be used, together with -Oz for the smallest footprint.

It is an application design choice as to which mode is used.

Normal operations

This section describes the OCEOS directives used in most applications. See file asw.c for an example.

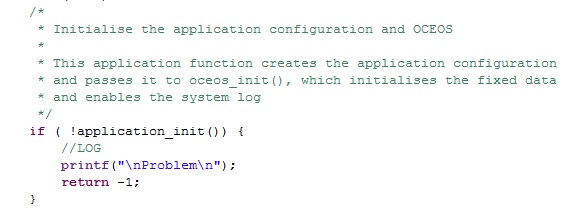

The calls to get OCEOS running are:

1. application_init: uses oceos_init to initialise the fixed data and start the system time and log

2. oceos_task_create: creates a task, setting its priority, number of jobs, etc.

3. oceos_init_finish: initialises the dynamic data area

4. oceos_start: starts the scheduler and passes control to the first task

After steps 1 to 4 above, the tasks implement the application functionality. If mutexes, semaphores, dataqs and/or timed actions are required, they are also created at step 2.

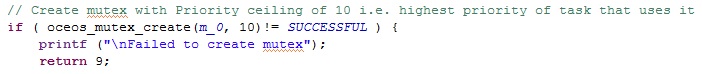

Note: it is mandatory to create exactly the number of mutexes, semaphores, and dataqs declared, otherwise oceos_init_finish() will return an error.
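The rule in this note can be modelled in a few lines of C. The sketch below is a model only and does not call OCEOS. Every name in it is hypothetical, and the declared counts are assumed values standing in for those set in oceos_config.h.

```c
/* A model of the rule only; none of these names are OCEOS identifiers. */
#define DECLARED_TASKS   2u   /* assumed count from the configuration header */
#define DECLARED_MUTEXES 1u   /* assumed count from the configuration header */

struct init_model {
    unsigned tasks_created;
    unsigned mutexes_created;
};

/* Each create call simply counts one more object of that kind. */
void model_task_create(struct init_model *m)  { m->tasks_created++; }
void model_mutex_create(struct init_model *m) { m->mutexes_created++; }

/* Returns 0 when every declared object was created and -1 otherwise,
 * mirroring the error that oceos_init_finish() is described as returning. */
int model_init_finish(const struct init_model *m)
{
    if (m->tasks_created != DECLARED_TASKS ||
        m->mutexes_created != DECLARED_MUTEXES) {
        return -1;
    }
    return 0;
}
```

In this model, creating two tasks but no mutex makes model_init_finish return -1. Creating the declared mutex as well makes it return 0.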

application_init()

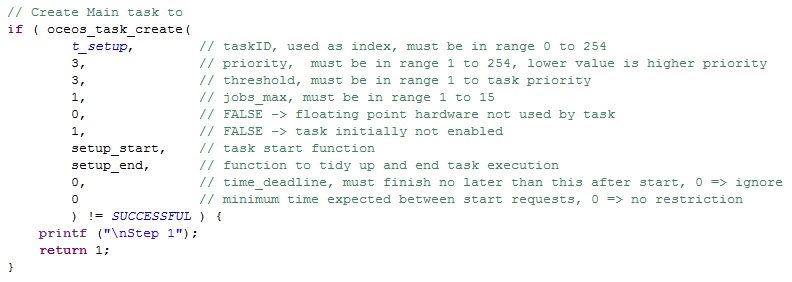

oceos_task_create (…)

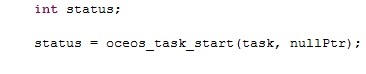

oceos_task_create prepares a task for execution. In the case above the parameters are:

- t_setup is an integer defined in the enumerated type in oceos_config.h. t_setup is used to identify this task to OCEOS thereafter.

- 3 is the priority allocated to this task. 1 is the highest and 254 the lowest priority.

- 3 is the priority threshold. The scheduler will only allow tasks with a priority higher than this threshold to pre-empt task t_setup.

- 1 is the maximum number of jobs for task t_setup. 15 is the maximum number of jobs that can be ready-to-run for any task.

- 0 indicates that floating point is not used by this task. Enabling floating point for a task causes the scheduler to store all floating point registers on the stack when the job is pre-empted. If the job is not using floating point this causes inefficiency.

- 1 causes the task to be enabled when OCEOS is started.

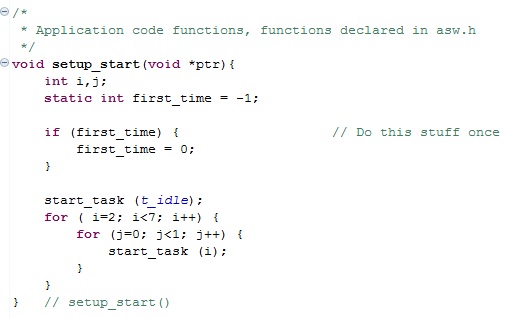

- setup_start is the name of the function to be executed for this task. The code for setup_start is in asw.c and the function declared in asw.h.

- setup_end is the function called when task t_setup terminates.

- 0 instructs the scheduler that there is no maximum execution time for this task. If a value (in microseconds) is specified the scheduler will raise an error if the task exceeds this execution time.

- 0 instructs the scheduler that there is no minimum time between executions of this task. If a time in microseconds is specified the scheduler will raise an error if this value is violated.
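The parameters described above can be summarised as a record, listed in the same order, with a check of the ranges this manual states. This is a sketch under assumptions and is not the OCEOS prototype. The struct, the field names, the function pointer type, and the demo functions are hypothetical. The threshold is assumed to share the priority range of 1 to 254. Consult the OCEOS include files for the real declaration of oceos_task_create.

```c
#include <stdint.h>

/* Hypothetical record of the task parameters, in the order described. */
struct task_params {
    unsigned id;                 /* e.g. t_setup from oceos_config.h */
    uint8_t  priority;           /* 1 = highest, 254 = lowest */
    uint8_t  threshold;          /* pre-emption threshold (range assumed) */
    uint8_t  jobs_max;           /* at most 15 ready-to-run jobs */
    int      uses_fp;            /* non-zero: FP registers saved on pre-emption */
    int      initially_enabled;  /* non-zero: enabled when OCEOS starts */
    void   (*start)(void *);     /* function executed for the task (type assumed) */
    void   (*end)(void *);       /* function called when the task terminates */
    uint32_t max_exec_us;        /* 0 = no maximum execution time */
    uint32_t min_interval_us;    /* 0 = no minimum time between executions */
};

/* Placeholder task functions standing in for setup_start and setup_end. */
void demo_start(void *arg) { (void)arg; }
void demo_end(void *arg)   { (void)arg; }

/* Checks only the ranges stated in this manual. Returns 1 if they hold. */
int task_params_in_range(const struct task_params *p)
{
    return p->priority >= 1u && p->priority <= 254u &&
           p->threshold >= 1u && p->threshold <= 254u &&
           p->jobs_max >= 1u && p->jobs_max <= 15u &&
           p->start != 0;
}
```

The values from the example above (priority 3, threshold 3, one job, no floating point, initially enabled, no time limits) pass this check, while a jobs_max of 16 or a priority of 0 does not.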

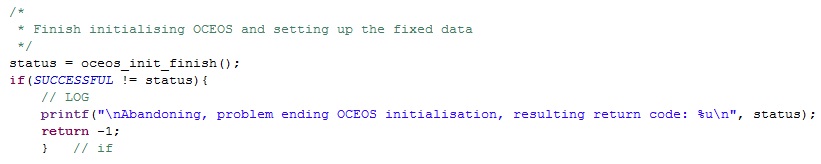

oceos_init_finish()

This function must be called before OCEOS is started. It completes the initialisation of OCEOS data for the application.

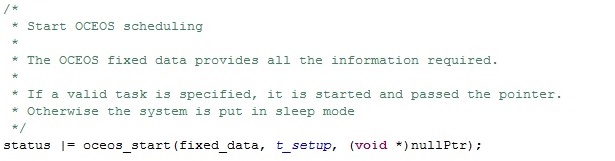

oceos_start()